Cursor IDE offers an extensive variety of AI models that power its intelligent features. Understanding the differences between these models, their capabilities, and when to use each one can significantly enhance your productivity and workflow. This guide will help you navigate Cursor's AI model options and make informed decisions based on your specific needs.

Understanding Cursor's Pricing Structure

Cursor offers two distinct modes of operation with different pricing models:

Normal Mode

In Normal Mode, each message costs a fixed number of requests based solely on the model you're using, regardless of context. Cursor optimizes context management without affecting your request count.

- Cost: Fixed requests per message (varies by model)

- Context: Optimized automatically by Cursor

- Best for: Everyday coding tasks and routine development work

- Free Options: Several models cost nothing in Normal Mode (Cursor Small, the Deepseek models, Grok 3 Mini, and GPT-4o mini up to 500 requests/day)
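Because Normal Mode charges a fixed number of requests per message, a session's cost depends only on how many messages you send to each model. The sketch below tallies that. The per-model figures are copied from the comparison tables in this guide; the dictionary and function names are hypothetical and not part of any Cursor API, and actual billing may differ.

```python
# Normal Mode request cost per message, copied from this guide's tables.
# Free models cost 0 requests. Names are illustrative, not a Cursor API.
NORMAL_MODE_REQUESTS: dict[str, float] = {
    "Claude 4 Sonnet": 1.0,
    "Claude 3.5 Haiku": 0.33,
    "o3-mini": 0.25,
    "o1 Mini": 2.5,
    "o1": 10.0,
    "Cursor Small": 0.0,
    "Deepseek V3": 0.0,
}


def session_requests(models_used: list[str]) -> float:
    """Total requests for a session: one fixed charge per message.

    Context size is deliberately absent; in Normal Mode it does not
    change what a message costs.
    """
    return sum(NORMAL_MODE_REQUESTS[model] for model in models_used)


if __name__ == "__main__":
    # 20 Claude 4 Sonnet messages, 2 o1 messages, 50 Cursor Small completions.
    session = ["Claude 4 Sonnet"] * 20 + ["o1"] * 2 + ["Cursor Small"] * 50
    print(session_requests(session))  # 40.0
```

Note how two o1 messages cost as much as twenty Claude 4 Sonnet messages: the per-message multiplier matters far more than how much code is in context.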

Max Mode

Max Mode provides enhanced AI capabilities with larger context windows and more tool calls, designed for complex tasks requiring deeper analysis.

- Cost: Token-based pricing (model provider's API price + 20% margin)

- Context: 200k to 2M tokens, depending on the model

- Tool calls: Up to 200 tool calls without continuation prompts

- Best for: Complex problems requiring extensive reasoning and analysis

- Pricing Examples:

  - Input tokens: $1.25-$75 per million tokens

  - Output tokens: $6-$150 per million tokens

  - Cache reads: $0.25-$37.50 per million tokens
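Max Mode's pricing rule, as described above, is the provider's per-token API price plus a 20% margin. The following is a minimal sketch of that arithmetic for a single request. It assumes the margin applies equally to input, output, and cache-read tokens, and the example prices are placeholders chosen from within the ranges listed, not quotes for any particular model. `ProviderPrices` and `max_mode_cost` are hypothetical names.

```python
from dataclasses import dataclass

# Cursor's margin on top of the provider's API price, as stated in this guide.
MAX_MODE_MARGIN = 0.20


@dataclass
class ProviderPrices:
    """Provider API prices in US dollars per million tokens (placeholders)."""
    input_usd: float
    output_usd: float
    cache_read_usd: float = 0.0


def max_mode_cost(prices: ProviderPrices, input_tokens: int,
                  output_tokens: int, cache_read_tokens: int = 0) -> float:
    """Estimate one Max Mode request's cost in dollars.

    Assumes the 20% margin applies uniformly to every token category.
    """
    provider_cost = (
        input_tokens * prices.input_usd
        + output_tokens * prices.output_usd
        + cache_read_tokens * prices.cache_read_usd
    ) / 1_000_000
    return provider_cost * (1 + MAX_MODE_MARGIN)


if __name__ == "__main__":
    # Placeholder prices within the ranges listed above.
    prices = ProviderPrices(input_usd=3.0, output_usd=15.0, cache_read_usd=0.30)
    cost = max_mode_cost(prices, input_tokens=150_000, output_tokens=8_000,
                         cache_read_tokens=50_000)
    print(f"${cost:.4f}")
```

Because input tokens usually dominate a large-context request, the input price tends to drive Max Mode costs more than the output price does.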

Comprehensive Model Comparison

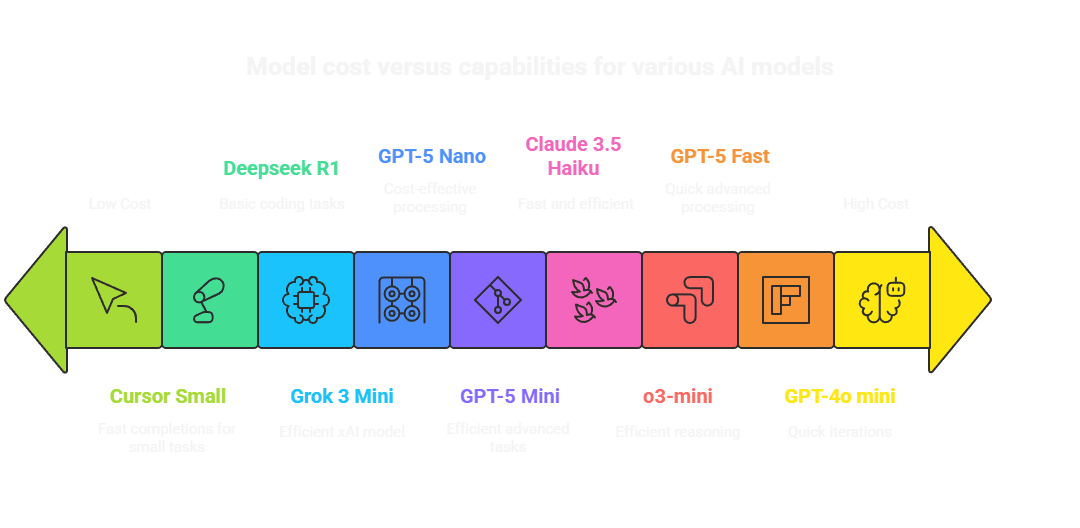

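Based on Cursor's official documentation at the time of writing, the tables below list the available models. Where a cost has two numbers, the first is for input tokens and the second for output tokens, priced per million tokens (MTok); Max Mode costs are given in requests per million tokens (req/MTok).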

Claude Models (Anthropic)

| Model | Mode | Context Window | Cost (Normal) | Cost (Max) | Capabilities | Best For |
|---|---|---|---|---|---|---|
| Claude 4.5 Sonnet | Normal/Max | 200k/1M | 1 request | 90/450 req/MTok | Agent, Thinking | Most advanced reasoning and coding |
| Claude 4.1 Opus | Max Only | 200k | - | 450/2250 req/MTok | Agent, Thinking | Highest-end complex reasoning |
| Claude 4 Sonnet 1M | Max Only | 1M | - | 90/450 req/MTok | Agent, Thinking | Large context processing |
| Claude 4 Sonnet | Normal/Max | 200k | 1 request | 90/450 req/MTok | Agent, Thinking | Advanced reasoning and coding |
| Claude 4 Opus | Max Only | 200k | - | 450/2250 req/MTok | Agent, Thinking | Complex reasoning tasks |
| Claude 3.7 Sonnet | Normal/Max | 200k | 1 request | 90/450 req/MTok | Agent, Thinking | Powerful, eager to make changes |
| Claude 3.5 Sonnet | Normal/Max | 200k | 1 request | 90/450 req/MTok | Agent, Thinking | Great all-rounder |
| Claude 3.5 Haiku | Normal | 60k | 0.33 requests | - | - | Fast and efficient |
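The Cost (Max) column in the table above is in requests per million tokens, input rate first and output rate second. Here is a minimal sketch of turning a Max Mode exchange into request units. The $0.04-per-request figure is an assumption, not something this guide states, but it is consistent with the table: an API price of $3 per million input tokens plus the 20% margin is $3.60, and $3.60 / $0.04 = 90 req/MTok. The function names are hypothetical.

```python
# Assumption: one request is billed at $0.04 (not stated in this guide).
USD_PER_REQUEST = 0.04


def max_mode_requests(input_tokens: int, output_tokens: int,
                      input_rate: float, output_rate: float) -> float:
    """Convert token usage to request units using req/MTok rates."""
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


def rate_from_api_price(usd_per_mtok: float, margin: float = 0.20) -> float:
    """Derive a req/MTok rate from a provider price plus the 20% margin."""
    return usd_per_mtok * (1 + margin) / USD_PER_REQUEST


if __name__ == "__main__":
    # Claude 4 Sonnet in Max Mode is listed at 90/450 req/MTok.
    used = max_mode_requests(200_000, 10_000, input_rate=90, output_rate=450)
    print(used)  # 22.5
    print(f"{rate_from_api_price(3.0):.1f} / {rate_from_api_price(15.0):.1f}")
```

A single Max Mode message that fills a 200k window therefore costs on the order of twenty Normal Mode requests, which is why Max Mode is best reserved for tasks that need the extra context.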

OpenAI Models

| Model | Mode | Context Window | Cost (Normal) | Cost (Max) | Capabilities | Best For |
|---|---|---|---|---|---|---|
| GPT-5 | Normal | 272k | 1.25/10 $/MTok | - | Agent, Thinking | Advanced reasoning and coding |
| GPT-5 Fast | Normal | 272k | 0.75/6 $/MTok | - | Agent, Thinking | Quick advanced processing |
| GPT-5 Mini | Normal | 272k | 0.25/2 $/MTok | - | Agent | Efficient advanced tasks |
| GPT-5 Nano | Normal | 272k | 0.05/0.4 $/MTok | - | Agent | Cost-effective processing |
| GPT-5-Codex | Normal | 272k | 1/8 $/MTok | - | Agent, Thinking, Code | Advanced code generation, refactoring, and architecture design |
| GPT-4.5 Preview | Normal | 60k | 75/150 $/MTok | - | Thinking | Cutting-edge capabilities |
| GPT-4.1 | Normal/Max | 200k/1M | 2/8 $/MTok | 60/240 req/MTok | Agent | Versatile and controlled |
| GPT-4o | Normal/Max | 128k | 1/4 $/MTok | 75/300 req/MTok | Agent, Thinking | Well-rounded performance |
| GPT-4o mini | Normal | 60k | Free (500/day) | - | - | Quick iterations |
| o4-mini | Normal/Max | 200k | 1 request | 33/132 req/MTok | Agent, Thinking | High reasoning efficiency |
| o3 | Normal/Max | 200k | 1 request | 300/1200 req/MTok | Agent, Thinking | Complex reasoning challenges |
| o3-mini | Normal | 200k | 0.25 requests | - | Agent, Thinking | Efficient reasoning |
| o1 | Normal | 200k | 10 requests | - | Thinking | Mathematical tasks |
| o1 Mini | Normal | 128k | 2.5 requests | - | Thinking | Focused problem domains |

Google Models

| Model | Mode | Context Window | Cost (Normal) | Cost (Max) | Capabilities | Best For |
|---|---|---|---|---|---|---|
| Gemini 2.5 Pro | Normal/Max | 200k/1M | 1 request | 4.5/105 req/MTok | Agent, Thinking | Advanced reasoning |
| Gemini 2.5 Flash | Max Only | 1M | - | 4.5/105 req/MTok | Agent, Thinking | Large context tasks |
| Gemini 2.0 Pro (exp) | Normal | 60k | 1 request | - | Thinking | Experimental features |

Other Models

| Model | Mode | Context Window | Cost (Normal) | Cost (Max) | Capabilities | Best For |
|---|---|---|---|---|---|---|
| Cursor Small | Normal | 60k | Free | - | - | Fast completions |
| Deepseek V3.1 | Normal/Max | 60k/1M | Free | 18.75/450 req/MTok | Agent | Latest coding capabilities |
| Deepseek V3 | Normal/Max | 60k/1M | Free | 18.75/450 req/MTok | Agent | Strong coding capabilities |
| Deepseek R1 | Normal | 60k | Free | - | Agent | Basic coding tasks |
| Grok 4 | Normal | 256k | 1 request | - | Agent, Thinking | Advanced reasoning |
| Grok 4 Fast | Normal/Max | 200k/2M | 1 request | 90/450 req/MTok | Agent, Thinking | Large-scale processing |
| Grok Code | Normal | 256k | 1 request | - | Agent, Thinking | Specialized coding |
| Grok 3 Beta | Normal/Max | 132k | 1 request | 90/450 req/MTok | Agent, Thinking | Internet-aware AI |
| Grok 3 Mini | Normal/Max | 132k | Free | 9/30 req/MTok | Agent | Efficient xAI model |
| Grok 2 | Normal | 60k | 1 request | - | - | General purpose |

Understanding Model Behavior Patterns

Thinking vs Non-Thinking Models

Thinking Models (Claude 4 Sonnet, o3, etc.):

- Infer your intent and plan ahead

- Make decisions without step-by-step guidance

- Ideal for exploration, refactoring, and independent work

- Can make bigger changes than expected

Non-Thinking Models (GPT-4.1 and other non-reasoning models):

- Wait for explicit instructions

- Don't infer or guess intentions

- Ideal for precise, controlled changes

- More predictable behavior

Model Assertiveness Levels

High Assertiveness (Claude 4 Sonnet, Gemini 2.5 Flash):

- Confident and make decisions with minimal prompting

- Take initiative and move quickly

- Great for rapid prototyping and exploration

Moderate Assertiveness (Claude 3.5 Sonnet, GPT-4o):

- Balanced approach to initiative

- Good for everyday coding tasks

- Reliable daily drivers

Low Assertiveness (GPT-4.1 and similar instruction-following models):

- Follow instructions closely

- Require more explicit guidance

- Perfect for precise, well-defined tasks

Max Mode: Enhanced Capabilities

Max Mode unlocks the full potential of Cursor's AI models with:

Enhanced Context

- Larger context windows: Up to 1M tokens for some models, and up to 2M for Grok 4 Fast

- Better file reading: Up to 750 lines per file read

- Large codebase support: Fit an entire framework's source in context

Advanced Tool Usage

- Up to 200 tool calls: No need to confirm continuation along the way

- Deep analysis: Extensive code exploration

- Complex reasoning: Multi-step problem solving

Context Window Scale Examples

- 10k tokens: Small utility libraries (Underscore.js)

- 60k tokens: Medium libraries (most of Lodash)

- 120k tokens: Full libraries or framework cores

- 200k tokens: Complete web frameworks (Express)

- 1M tokens: Major framework cores (Django without tests)
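To see where your own project falls on this scale, you can estimate its token count from the size of its source files. The sketch below uses the common rule of thumb of roughly four characters per token. That ratio is only an approximation (real counts depend on each model's tokenizer and on the code itself), and the set of file extensions is a hypothetical default you would adjust for your project.

```python
from pathlib import Path

CHARS_PER_TOKEN = 4  # Rough heuristic; actual tokenizers vary by model.
SOURCE_SUFFIXES = {".py", ".js", ".ts", ".go", ".rs", ".java", ".md"}


def estimate_tokens(root: str, suffixes: set[str] = SOURCE_SUFFIXES) -> int:
    """Approximate the token count of all matching files under root."""
    total_chars = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            # Ignore undecodable bytes rather than failing on binary data.
            text = path.read_text(encoding="utf-8", errors="ignore")
            total_chars += len(text)
    return total_chars // CHARS_PER_TOKEN


if __name__ == "__main__":
    import sys

    root = sys.argv[1] if len(sys.argv) > 1 else "."
    print(f"~{estimate_tokens(root):,} tokens")
```

For an exact count with a specific model family, a tokenizer library for that family gives better numbers than this heuristic.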

Model Selection Guide

Based on Task Complexity

For Most Complex Tasks:

- Claude 4.5 Sonnet - Most advanced reasoning, with 1M context in Max Mode

- GPT-5 - State-of-the-art capabilities

- Grok 4 Fast - Massive 2M context window in Max Mode

- Claude 4.1 Opus - Deep reasoning specialist

For Everyday Development:

- GPT-5-Codex - Specialized code generation and architecture

- GPT-5 Mini - Efficient advanced capabilities

- Claude 4 Sonnet - Strong reasoning and coding

- Gemini 2.5 Pro - Well-rounded performance

- Grok 4 - Advanced reasoning at good value

For Quick Tasks:

- GPT-5 Nano - Fast, cost-effective processing

- Claude 3.5 Haiku - Quick responses

- Cursor Small - Instant completions

- Deepseek R1 - Free coding assistance

Based on Working Style

If you prefer control and clear instructions:

- GPT-5 Mini (controlled power)

- Claude 4 Sonnet (non-thinking mode)

- GPT-4.1 (precise execution)

- Grok Code (specialized coding)

If you want the model to take initiative:

- Claude 4.5 Sonnet (proactive reasoning)

- GPT-5 (advanced inference)

- Gemini 2.5 Pro (balanced initiative)

- Grok 4 Fast (aggressive processing)

Based on Context Needs

Large Codebase Work:

- Grok 4 Fast (2M context in Max Mode)

- Gemini 2.5 Pro (1M context in Max Mode)

- Claude 4.5 Sonnet (1M context in Max Mode)

- GPT-4.1 (1M context in Max Mode)

Standard Projects:

- GPT-5 family (272k context)

- Grok 4 (256k context)

- Most Normal Mode models (200k context)

- Smaller-context models such as Cursor Small, the Deepseek models, and GPT-4o (60k-128k)
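Choosing among these lists comes down to comparing your project's estimated token count with each model's window. A minimal sketch follows, using windows copied from the comparison tables, with Normal Mode and Max Mode listed separately. The `HEADROOM` factor, which reserves part of the window for the conversation and the model's reply, is an assumed value and not a Cursor setting; the function name is hypothetical.

```python
from typing import Optional

# Context windows in tokens from this guide's tables: (normal, max).
# None means the model is not offered in that mode.
CONTEXT_WINDOWS: dict[str, tuple[int, Optional[int]]] = {
    "Grok 4 Fast": (200_000, 2_000_000),
    "Gemini 2.5 Pro": (200_000, 1_000_000),
    "Claude 4.5 Sonnet": (200_000, 1_000_000),
    "GPT-4.1": (200_000, 1_000_000),
    "GPT-5": (272_000, None),
    "Grok 4": (256_000, None),
    "Cursor Small": (60_000, None),
}

HEADROOM = 0.75  # Assumed: use at most 75% of the window for code.


def models_that_fit(estimated_tokens: int, max_mode: bool) -> list[str]:
    """Return models whose window holds the code with room to spare."""
    fits = []
    for model, (normal_window, max_window) in CONTEXT_WINDOWS.items():
        window = max_window if max_mode else normal_window
        if window is not None and estimated_tokens <= window * HEADROOM:
            fits.append(model)
    return fits


if __name__ == "__main__":
    # Hypothetical project estimated at roughly 450k tokens.
    print(models_that_fit(450_000, max_mode=False))
    print(models_that_fit(450_000, max_mode=True))
```

In practice the agent rarely needs the whole codebase at once, so a project that does not fit can still work well in Normal Mode; the check is most useful for tasks like whole-repository refactors.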

Budget Considerations

Free Options

- Cursor Small: Unlimited free usage

- Deepseek Models: All free in Normal Mode (V3.1, V3, R1)

- GPT-4o mini: 500 free requests/day

- Grok 3 Mini: Free in Normal Mode

Cost-Effective Premium

- Claude 3.5 Haiku: 0.33 requests/message

- o3-mini: 0.25 requests/message

- GPT-5 Nano: $0.05/$0.40 per million tokens

- Grok 3 Mini: Free in Normal Mode; 9/30 req/MTok in Max Mode

Mid-Range Options

- GPT-5 Mini: $0.25/$2.00 per million tokens

- GPT-4o: $1/$4 per million tokens

- Claude 3.7 Sonnet: 1 request/message (Normal)

High-Performance Options

- Claude 4.5 Sonnet: Premium performance with a 1M context window in Max Mode

- GPT-5: $1.25/$10 per million tokens

- GPT-4.5 Preview: $75/$150 per million tokens

- Grok 4 Fast: Large 2M context window in Max Mode
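Since the per-token models charge separately for input and output, the cheapest option depends on your mix of the two. This sketch ranks the per-token models from the lists above for a workload you describe. It uses the listed prices as-is (first number input, second output, per million tokens), leaves out request-priced models because they are not billed per token in Normal Mode, and the monthly token volumes in the usage example are made up for illustration.

```python
# Normal Mode prices in US dollars per million tokens: (input, output),
# copied from the budget lists above.
PRICES_PER_MTOK: dict[str, tuple[float, float]] = {
    "GPT-5 Nano": (0.05, 0.40),
    "GPT-5 Mini": (0.25, 2.00),
    "GPT-4o": (1.00, 4.00),
    "GPT-5": (1.25, 10.00),
    "GPT-4.5 Preview": (75.00, 150.00),
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of a workload on one model."""
    in_price, out_price = PRICES_PER_MTOK[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def rank_by_cost(input_tokens: int, output_tokens: int) -> list[tuple[str, float]]:
    """Return (model, cost) pairs sorted from cheapest to most expensive."""
    costs = [(m, workload_cost(m, input_tokens, output_tokens))
             for m in PRICES_PER_MTOK]
    return sorted(costs, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Hypothetical month: 10M input tokens, 1M output tokens.
    for model, cost in rank_by_cost(10_000_000, 1_000_000):
        print(f"{model:<16} ${cost:,.2f}")
```

Output-heavy workloads such as large code generation shift the balance toward models with low output prices, so it is worth rerunning the comparison with your own input/output ratio.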

How to Switch Models and Enable Max Mode

Switching Models

- In Chat Panel: Use the model dropdown below the input box

- In inline edit (Cmd/Ctrl+K): Use the model dropdown in the prompt bar

- In Terminal: Press Cmd/Ctrl+K and select model

- In Settings: Go to Cursor Settings > Models

Enabling Max Mode

- Open the model picker

- Toggle "Max mode" switch

- Select a Max Mode compatible model

- Note: Requires usage-based pricing for most models

Auto-Select Feature

Enable "Auto-select model" to let Cursor choose the optimal model based on:

- Task complexity

- Model availability

- Performance requirements

- Cost considerations

When to Use Max Mode

Ideal for Max Mode:

- Complex debugging sessions

- Large codebase refactoring

- Architecture planning

- Multi-file analysis

- Deep problem exploration

Stick with Normal Mode for:

- Routine coding tasks

- Simple completions

- Quick fixes

- Well-defined changes
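The guidance above can be condensed into a rule of thumb. The sketch below is a hypothetical heuristic, not Cursor's Auto-select logic: it recommends Max Mode when a task falls into one of the "Ideal for Max Mode" categories, or when the material you want in context exceeds a typical 200k Normal Mode window. The task labels and the window size are assumptions drawn from this guide's lists.

```python
from enum import Enum, auto


class Task(Enum):
    """Task categories taken from the lists above (hypothetical labels)."""
    COMPLEX_DEBUGGING = auto()
    LARGE_REFACTOR = auto()
    ARCHITECTURE_PLANNING = auto()
    MULTI_FILE_ANALYSIS = auto()
    DEEP_EXPLORATION = auto()
    ROUTINE_CODING = auto()
    SIMPLE_COMPLETION = auto()
    QUICK_FIX = auto()
    WELL_DEFINED_CHANGE = auto()


MAX_MODE_TASKS = {
    Task.COMPLEX_DEBUGGING,
    Task.LARGE_REFACTOR,
    Task.ARCHITECTURE_PLANNING,
    Task.MULTI_FILE_ANALYSIS,
    Task.DEEP_EXPLORATION,
}
NORMAL_WINDOW_TOKENS = 200_000  # Assumed typical Normal Mode window.


def recommend_mode(task: Task, context_tokens: int = 0) -> str:
    """Return "max" or "normal" following this guide's rules of thumb."""
    if task in MAX_MODE_TASKS or context_tokens > NORMAL_WINDOW_TOKENS:
        return "max"
    return "normal"


if __name__ == "__main__":
    print(recommend_mode(Task.QUICK_FIX))
    print(recommend_mode(Task.LARGE_REFACTOR))
    print(recommend_mode(Task.QUICK_FIX, context_tokens=500_000))
```

The context check comes second on purpose: even a simple fix needs Max Mode if the relevant code genuinely cannot fit in a Normal Mode window.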

Privacy and Security

All models are hosted on US-based infrastructure by:

- Original model providers (Anthropic, OpenAI, etc.)

- Cursor's trusted infrastructure

- Verified partner services

With Privacy Mode enabled:

- No data storage by Cursor or providers

- Data deleted after each request

- Full request isolation

Recommendations for Different User Types

For Beginners

- Start with GPT-5 Mini or Claude 4 Sonnet

- Use Deepseek R1 for free practice

- Try Auto-select for optimal model choice

- Experiment with Cursor Small for quick tasks

For Experienced Developers

- Claude 4.5 Sonnet for complex reasoning

- GPT-5 for advanced capabilities

- Grok 4 Fast for large codebases

- Grok Code for specialized development

- Mix models based on task requirements

For Teams and Organizations

- Claude 4.5 Sonnet as primary model

- GPT-5 for critical projects

- Gemini 2.5 Pro for balanced performance

- Max Mode for complex codebases

- Usage-based pricing for flexibility

Conclusion

The AI model landscape in Cursor has expanded dramatically. From cost-effective options like GPT-5 Nano to high-end models like Claude 4.5 Sonnet and Grok 4 Fast, there is a model to fit most development scenarios and budgets.

Key Takeaways:

- Select models based on task complexity, working style, and context needs

- Leverage Max Mode for complex projects requiring extensive context (up to 2M tokens)

- Utilize free and cost-effective models for routine development

- Consider the GPT-5 family, whose Mini and Nano variants offer much lower per-token prices

- Take advantage of specialized models for specific tasks

- Use Auto-select to let Cursor pick a model based on task requirements

The future of AI-assisted development has arrived, and Cursor's comprehensive model lineup empowers developers to code faster, smarter, and more efficiently than ever before. With options ranging from free models to state-of-the-art AI capabilities, you can choose the perfect balance of performance and cost for your specific needs.